What is a Data Lake?

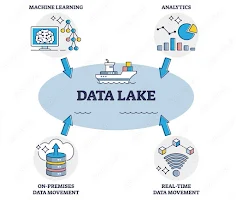

A data lake is a centralized repository that allows organizations to store all their structured and unstructured data at any scale. Unlike traditional data warehouses, which require data to be processed and structured before storage, a data lake accepts data in its raw format and applies structure only when it's needed for analysis or other tasks. This flexibility is one of the key advantages of data lakes, as it enables organizations to collect vast amounts of diverse data without worrying about the specific format or schema upfront.
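To make "structure only when it's needed" concrete, here is a minimal sketch of schema-on-read in plain Python. The sample events, the field names, and the `read_with_schema` helper are all hypothetical; a real lake would usually do this with a query engine rather than hand-written code.

```python
import json

# Raw events land in the lake exactly as they arrive; no schema is enforced on write.
RAW_EVENTS = [
    '{"user": "u1", "amount": "19.99", "ts": "2024-01-05"}',
    '{"user": "u2", "amount": 5, "coupon": "WELCOME"}',
    '{"user": "u3"}',
]


def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: pick the requested fields and cast them."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            # Fields missing from the raw record become None instead of failing.
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


# Two consumers read the same raw data with different schemas.
billing_view = read_with_schema(RAW_EVENTS, {"user": str, "amount": float})
marketing_view = read_with_schema(RAW_EVENTS, {"user": str, "coupon": str})
```

The same three raw records serve both views, and neither view had to be planned when the data was written.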

Data lakes typically use a distributed file system such as the Hadoop Distributed File System (HDFS), or cloud object storage such as Amazon S3 or Azure Data Lake Storage, to store data across multiple nodes or servers. This distributed architecture allows for horizontal scalability, meaning organizations can expand their data lake by adding capacity as their data volumes grow.

One of the primary goals of a data lake is to break down data silos within an organization by providing a unified and centralized repository for all types of data, including structured data from relational databases, semi-structured data like JSON or XML, and unstructured data such as text documents, images, and videos. By consolidating data in a single location, data lakes make it easier for organizations to perform advanced analytics, machine learning, and other data-driven tasks that require access to a wide variety of data sources.

However, while data lakes offer significant advantages in terms of flexibility and scalability, they also present challenges in terms of data governance, security, and data quality. Without proper management and oversight, data lakes can quickly become data swamps, filled with inconsistent, low-quality data that's difficult to analyze effectively. To avoid this pitfall, organizations must implement robust data governance policies and employ data management tools and practices to ensure data quality, security, and compliance with regulatory requirements.
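One common way to keep a lake from turning into a swamp is a quality gate at the point of ingestion. The sketch below is one possible shape for such a gate, not a standard tool: the function names, the required fields, and the sample records are assumptions made for illustration.

```python
def validate(record, required_fields):
    """Return a list of problems found in one record (empty list means it passes)."""
    problems = []
    for field in required_fields:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    return problems


def quality_gate(records, required_fields):
    """Split records into accepted ones and quarantined ones with their problems."""
    accepted, quarantined = [], []
    for record in records:
        problems = validate(record, required_fields)
        if problems:
            # Keep the bad record and the reason, so it can be inspected later.
            quarantined.append({"record": record, "problems": problems})
        else:
            accepted.append(record)
    return accepted, quarantined


records = [
    {"order_id": "o-1", "customer_id": "c-9", "total": 42.0},
    {"order_id": "o-2", "customer_id": "", "total": 10.0},
    {"order_id": None, "total": 3.5},
]
accepted, quarantined = quality_gate(records, ["order_id", "customer_id"])
```

Quarantining rather than dropping failed records keeps the raw data available while stopping it from reaching analysts unchecked.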

In short, a data lake gives organizations a centralized repository for storing and analyzing diverse types of data at scale. Successful implementation, however, requires careful planning, effective data governance, and ongoing management to ensure that the data lake remains a valuable resource rather than a burdensome liability.

How to Build a Data Lake?

Building a data lake involves several key steps to ensure its effectiveness, scalability, and usability:

1) Define Goals and Use Cases: Start by clearly defining the objectives and use cases for the data lake. Identify the types of data you want to store, the analytics you want to perform, and the business insights you aim to derive from the data.

2) Select a Storage Platform: Choose a storage platform that aligns with your organization's needs and infrastructure. This could be on-premises solutions like Hadoop Distributed File System (HDFS) or cloud-based options such as Amazon S3, Azure Data Lake Storage, or Google Cloud Storage. Consider factors like scalability, performance, cost, and integration with existing systems.

3) Design Data Ingestion Pipeline: Develop a robust data ingestion pipeline to efficiently ingest data from various sources into the data lake. This may involve batch processing, streaming data ingestion, or a combination of both. Use tools like Apache Kafka, Apache NiFi, or cloud-native services like AWS Glue or Azure Data Factory to streamline data ingestion processes.

4) Implement Data Governance: Establish data governance policies and procedures to ensure data quality, security, and compliance with regulatory requirements. Define data access controls, data lineage, metadata management, and data retention policies to govern data throughout its lifecycle in the data lake.

5) Organize Data Lake Architecture: Design the data lake architecture to accommodate different types of data, including structured, semi-structured, and unstructured data. Consider using a tiered storage approach to optimize cost and performance, with hot, warm, and cold storage tiers based on data access patterns and usage.

6) Enable Data Cataloging and Metadata Management: Implement a data catalog and metadata management system to catalog and index data stored in the data lake. This helps users discover, understand, and access relevant data assets for analysis and decision-making. Tools like Apache Atlas, AWS Glue Data Catalog, or Azure Data Catalog can facilitate metadata management.

7) Enable Data Processing and Analytics: Provide tools and frameworks for data processing and analytics within the data lake environment. This may include batch processing with Apache Spark, interactive querying with Apache Hive or Presto, and machine learning with frameworks like TensorFlow or PyTorch. Leverage cloud-based analytics services for scalable and cost-effective analytics capabilities.

8) Ensure Data Security and Compliance: Implement robust security controls to protect data stored in the data lake from unauthorized access, data breaches, and cyber threats. Use encryption, access controls, authentication mechanisms, and auditing capabilities to safeguard sensitive data. Ensure compliance with relevant data protection regulations such as GDPR, HIPAA, or CCPA.

9) Monitor and Optimize Performance: Continuously monitor the performance and health of the data lake infrastructure and workloads. Implement monitoring and logging solutions to track resource utilization, data access patterns, query performance, and system errors. Use this data to optimize resource allocation, tune configurations, and improve overall performance.

10) Provide Training and Support: Offer training and support to users and stakeholders to enable them to effectively utilize the data lake for their analytics and decision-making needs. Provide documentation, tutorials, and hands-on training sessions to empower users to extract actionable insights from the data lake.
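As an illustration of step 3 (the ingestion pipeline), the sketch below lands a small batch of raw records in date-partitioned folders. It uses a temporary local directory as a stand-in for HDFS or cloud storage; the `raw/<source>/date=<day>/` layout, the `ingest_batch` function, and the `part-0000.jsonl` file name are assumptions chosen for the example, not a required convention.

```python
import json
import tempfile
from pathlib import Path


def ingest_batch(lake_root, source, records, date_field="event_date"):
    """Append raw records as JSON lines under raw/<source>/date=<YYYY-MM-DD>/."""
    written = {}
    for record in records:
        partition = Path(lake_root) / "raw" / source / f"date={record[date_field]}"
        partition.mkdir(parents=True, exist_ok=True)
        target = partition / "part-0000.jsonl"
        # Records are stored unchanged; no schema is applied at this stage.
        with target.open("a", encoding="utf-8") as handle:
            handle.write(json.dumps(record) + "\n")
        written[str(target)] = written.get(str(target), 0) + 1
    return written


# A temporary directory stands in for the real storage platform.
lake_root = tempfile.mkdtemp(prefix="lake_")
counts = ingest_batch(
    lake_root,
    "web_logs",
    [
        {"event_date": "2024-01-05", "path": "/home"},
        {"event_date": "2024-01-05", "path": "/cart"},
        {"event_date": "2024-01-06", "path": "/home"},
    ],
)
```

Partitioning by date lets later queries read only the days they need, which also makes it simpler to move older partitions to colder storage tiers.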

Data Lake Tools

There are several tools and technologies available for building and managing data lakes. Here are some popular data lake tools across different categories:

1) Data Ingestion:

- Apache NiFi: An open-source data ingestion and distribution system that provides powerful and scalable capabilities for routing, transforming, and processing data from various sources.

- Apache Kafka: A distributed streaming platform that enables real-time data ingestion, messaging, and processing at scale.

- AWS Glue: A fully managed extract, transform, and load (ETL) service that simplifies data ingestion from various sources into AWS data lakes.

2) Storage:

- Hadoop Distributed File System (HDFS): A distributed file system that provides scalable and reliable storage for big data across clusters of commodity hardware.

- Amazon S3: A scalable object storage service offered by Amazon Web Services (AWS) that provides durable and cost-effective storage for data lakes.

- Azure Data Lake Storage: A scalable and secure cloud-based storage service provided by Microsoft Azure for storing big data in structured and unstructured formats.

3) Data Processing and Analytics:

- Apache Spark: A fast and general-purpose distributed computing engine for processing large-scale data sets with advanced analytics capabilities.

- Apache Hive: A data warehouse infrastructure built on top of Hadoop that provides SQL-like querying and data summarization capabilities for data stored in Hadoop.

- Presto: An open-source distributed SQL query engine that enables interactive querying and analysis of data across multiple data sources.

4) Metadata Management:

- Apache Atlas: A scalable and extensible metadata management solution for Hadoop that provides governance and discovery of data assets within the data lake.

- AWS Glue Data Catalog: A fully managed metadata repository and catalog service provided by AWS for managing and discovering data assets in AWS data lakes.

- Azure Data Catalog: A cloud-based metadata management service provided by Microsoft Azure for discovering, understanding, and managing data assets across the organization.

5) Data Governance and Security:

- Apache Ranger: A comprehensive security framework for Hadoop that provides centralized security administration, authorization, and auditing for data lakes.

- AWS Lake Formation: A service provided by AWS for setting up secure data lakes in AWS, including data cataloging, access controls, and data encryption.

- Microsoft Purview (formerly Azure Purview): A unified data governance service from Microsoft for discovering, cataloging, and managing data assets across on-premises and cloud environments.
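The governance and security tools above share a core idea: centrally defined policies decide who may do what to which data, and every decision is recorded. The toy sketch below shows that idea only. It is not the API of Apache Ranger, AWS Lake Formation, or Purview, and the roles, path prefixes, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    role: str
    path_prefix: str
    actions: frozenset


# Hypothetical policies: analysts may only read curated data.
POLICIES = [
    Policy("analyst", "curated/", frozenset({"read"})),
    Policy("engineer", "raw/", frozenset({"read", "write"})),
    Policy("engineer", "curated/", frozenset({"read", "write"})),
]


def is_allowed(role, action, path, policies=POLICIES):
    """Deny by default; allow only when some policy matches role, path, and action."""
    return any(
        p.role == role and path.startswith(p.path_prefix) and action in p.actions
        for p in policies
    )


audit_log = []


def access(role, action, path):
    """Check a request and record the decision so it can be audited later."""
    decision = is_allowed(role, action, path)
    audit_log.append((role, action, path, decision))
    return decision
```

For example, `access("analyst", "read", "raw/web_logs/x.jsonl")` is denied because no policy grants analysts anything under `raw/`, and the denial still appears in `audit_log`.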

Data Lake Example

Here's a concise example of a data lake implementation:

A multinational e-commerce company sets up a data lake to centralize and analyze its data streams. It ingests data from sources such as website activity logs, customer transactions, and social media interactions. Using cloud-based storage like Amazon S3, it stores raw data in its native format without predefined schemas. Analysts and data scientists then use this data for advanced analytics, such as customer segmentation, product recommendations, and demand forecasting. With data governance measures in place, including access controls and encryption, the company maintains data security and compliance. The data lake helps the company derive insights, improve customer experiences, and drive business growth.
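To give a feel for the analytics side of this example, here is a deliberately simple sketch of customer segmentation by total spend. The transaction records, the `segment` thresholds, and the segment labels are invented for illustration; a real segmentation would likely use richer features and a proper model.

```python
from collections import defaultdict

# Hypothetical raw transaction events, as they might sit in the lake.
transactions = [
    {"customer": "c1", "amount": 120.0},
    {"customer": "c2", "amount": 15.0},
    {"customer": "c1", "amount": 80.0},
    {"customer": "c3", "amount": 60.0},
]


def total_spend(events):
    """Sum the amount spent by each customer."""
    totals = defaultdict(float)
    for event in events:
        totals[event["customer"]] += event["amount"]
    return dict(totals)


def segment(total, high=150.0, mid=50.0):
    """Bucket a customer by total spend; thresholds are illustrative only."""
    if total >= high:
        return "high"
    if total >= mid:
        return "mid"
    return "low"


segments = {
    customer: segment(total)
    for customer, total in total_spend(transactions).items()
}
```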

Data Lake Architecture

Data lake architecture typically involves multiple layers and components designed to handle the ingestion, storage, processing, and analysis of large volumes of data from diverse sources. Here's an overview of a typical data lake architecture:

- Data sources, including structured and unstructured data, feed into the data lake.

- Ingestion tools like Apache NiFi or AWS Glue gather and process data from various sources.

- Raw data is stored in a distributed storage system such as Amazon S3 or Azure Data Lake Storage.

- Metadata management tools catalog and organize data for easy discovery and analysis.

- Data processing frameworks like Apache Spark, or managed services such as Amazon EMR that run them, handle batch and real-time data processing.

- Analytics engines like Apache Hive or Presto enable querying and analysis of data lake contents.

- Security measures, including access controls and encryption, safeguard data lake assets.

- Data governance practices ensure data quality, compliance, and integrity throughout the data lifecycle.

- Integration with machine learning frameworks allows for advanced analytics and predictive modeling.

- Continuous monitoring and optimization ensure the efficiency and reliability of the data lake infrastructure.
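The metadata layer in the overview above is easier to picture with a small example. The sketch below is a toy in-memory catalog, not the interface of Apache Atlas or any cloud catalog service; the `Catalog` class, the dataset names, the owners, and the tags are all hypothetical.

```python
class Catalog:
    """A toy in-memory catalog: maps dataset names to location, format, owner, and tags."""

    def __init__(self):
        self._entries = {}

    def register(self, name, location, fmt, owner, tags=()):
        """Record where a dataset lives and how it is described."""
        self._entries[name] = {
            "location": location,
            "format": fmt,
            "owner": owner,
            "tags": set(tags),
        }

    def search(self, tag):
        """Return the names of datasets carrying the given tag, sorted for stable output."""
        return sorted(n for n, e in self._entries.items() if tag in e["tags"])

    def describe(self, name):
        """Return the stored metadata for one dataset."""
        return self._entries[name]


catalog = Catalog()
catalog.register("web_logs", "raw/web_logs/", "jsonl", "platform-team", tags=["clickstream", "raw"])
catalog.register("orders", "curated/orders/", "parquet", "sales-team", tags=["sales", "curated"])
catalog.register("order_events", "raw/order_events/", "jsonl", "sales-team", tags=["sales", "raw"])
```

With entries like these, an analyst can call `catalog.search("sales")` to find relevant datasets without browsing storage paths by hand.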


Advantages of Data Lake

- Scalability: Data lakes can scale horizontally to accommodate massive volumes of data from various sources.

- Flexibility: They accept diverse data types and formats, allowing organizations to store raw data without predefined schemas.

- Cost-effectiveness: Cloud-based data lakes offer cost-effective storage solutions, especially for large-scale data storage.

- Advanced Analytics: Data lakes enable advanced analytics, including machine learning, predictive modeling, and real-time analytics.

- Data Exploration: They facilitate data exploration and discovery, empowering analysts to derive insights from raw data.

- Data Integration: Centralized data storage promotes data integration and eliminates data silos within organizations.

- Agility: Data lakes support agile data processing and analytics workflows, allowing organizations to respond quickly to changing business needs.

Disadvantages of Data Lake

- Data Quality: Without proper governance and oversight, data lakes can suffer from poor data quality, leading to inaccurate insights.

- Complexity: Building and managing a data lake requires expertise in a range of technologies and disciplines, which makes it complex and challenging.

- Data Governance: Establishing effective data governance practices, including data security and compliance, can be daunting in data lake environments.

- Performance: Data lakes may experience performance issues, especially with ad-hoc querying and analytics on unstructured or semi-structured data.

- Integration Complexity: Integrating data from disparate sources into the data lake can be complex and time-consuming, requiring extensive data transformation and preprocessing.

- Data Silos: While data lakes aim to eliminate data silos, improper management can lead to the creation of new silos within the data lake itself.

- Overhead: Maintaining and managing a data lake infrastructure incurs overhead costs, including infrastructure provisioning, monitoring, and optimization.

Data Lake vs Data Warehouse

Here are the key differences between a data lake and a data warehouse:

| Aspect | Data Lake | Data Warehouse |
|---|---|---|
| Data Structure | Stores structured, semi-structured, and unstructured data in its raw format without requiring schema upfront. | Stores structured data in a predefined schema optimized for querying and analysis. |
| Data Type | Accepts diverse data types including text, images, videos, JSON, XML, etc. | Primarily handles structured data from relational databases. |
| Data Processing | Supports both batch and real-time data processing. | Primarily optimized for batch processing. |
| Schema Flexibility | Schema-on-read: applies schema only when data is accessed. | Schema-on-write: requires data to be structured and predefined before loading. |
| Scalability | Horizontally scalable to handle large volumes of data. | Vertically scalable for structured data processing. |
| Cost | Generally more cost-effective for storing large volumes of raw data. | May be more expensive due to the need for predefined schemas and optimization for querying. |
| Use Cases | Suited for exploratory analytics, data discovery, and machine learning. | Ideal for business intelligence, reporting, and decision support. |
| Data Governance | Requires robust governance practices to manage data quality, security, and compliance. | Typically includes built-in governance features for managing data quality, security, and compliance. |
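The warehouse side of the schema comparison can be sketched in a few lines. This is a minimal illustration of schema-on-write, with a Python list standing in for a warehouse table; the `ORDERS_SCHEMA` fields and the `load_row` function are assumptions made for the example.

```python
# The schema is fixed before any data is loaded.
ORDERS_SCHEMA = {"order_id": str, "quantity": int, "unit_price": float}


def load_row(table, row, schema=ORDERS_SCHEMA):
    """Append row to table only if it has exactly the schema's fields and types."""
    if set(row) != set(schema):
        raise ValueError(f"field mismatch: {sorted(set(row) ^ set(schema))}")
    for field, expected in schema.items():
        if not isinstance(row[field], expected):
            raise TypeError(f"{field} must be {expected.__name__}")
    table.append(row)


orders_table = []
load_row(orders_table, {"order_id": "o-1", "quantity": 2, "unit_price": 9.5})

# Rows that do not fit the schema are rejected at load time.
rejected = []
for bad in [
    {"order_id": "o-2", "quantity": "two", "unit_price": 9.5},
    {"order_id": "o-3", "quantity": 1},
]:
    try:
        load_row(orders_table, bad)
    except (TypeError, ValueError) as err:
        rejected.append(str(err))
```

A data lake would have stored all three rows as they arrived and left the checking to whoever reads them, which is the trade-off the table describes.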
